Integrating OpenAI’s Whisper API into your application lets you convert spoken language into written text. With Whisper’s speech recognition, your app can transcribe recorded audio to text, which enables features such as automated note-taking, caption generation, and content analysis.

What Is Whisper API and Why Integrate It?

Whisper API is a speech-to-text service from OpenAI. It supports many languages and tends to stay accurate with accented speech and moderate background noise. Integrating it lets your application handle audio-to-text tasks with minimal setup, which improves the user experience and expands functionality.

ChatGPT’s text models work on text, so audio has to be transcribed first through a speech-to-text API such as Whisper.

You can integrate Whisper API and ChatGPT’s capabilities to create a complete workflow from audio transcription to summarization.

Step-by-Step Guide to Integrate Whisper API

Here’s a clear, step-by-step guide for how to use the Whisper API so you can integrate speech-to-text into your workflow with ChatGPT or other tools.

1. Get API Access

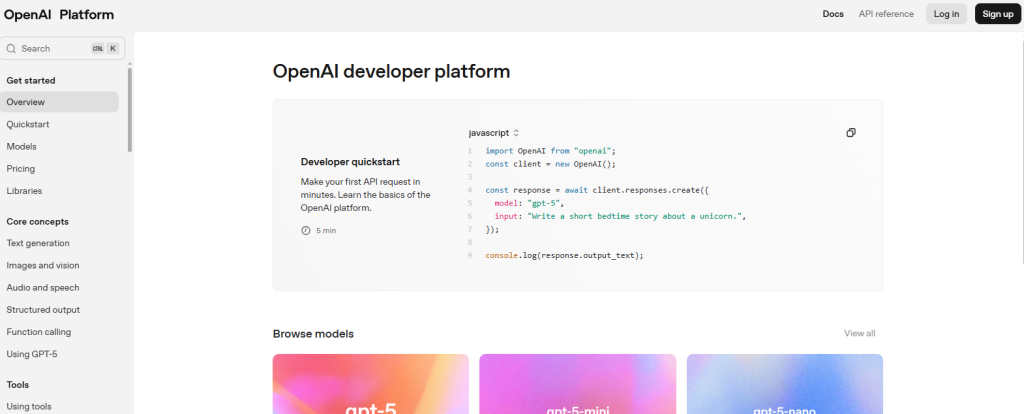

- Sign up for an OpenAI account at https://platform.openai.com.

- Go to your account dashboard and generate an API key.

- Keep this key private — it’s what your scripts or apps will use to connect to OpenAI’s Whisper service.
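One common way to keep the key out of source code is to read it from an environment variable. The sketch below assumes the variable is named `OPENAI_API_KEY` (the name the official SDK also looks for by default); `load_api_key` is a hypothetical helper, not part of the SDK.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in the environment, failing early if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Fail before any request is made, with a message that says what to fix.
        raise RuntimeError(f"Set the {env_var} environment variable before running.")
    return key
```

Calling `load_api_key()` at startup surfaces a missing key immediately instead of at the first API request.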

2. Install the OpenAI SDK

If you’re using Python, install the official SDK:

pip install openai

Or for Node.js:

npm install openai

3. Prepare Your Audio File

- Supported formats include MP3, MP4, MPEG, MPGA, M4A, WAV, and WebM. Uploads are limited to 25 MB per file, so compress or split longer recordings.

- Make sure your recording is clear, with minimal background noise.
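A quick local check before uploading can catch unsupported or oversized files early. This is a minimal sketch: the extension list and the 25 MB limit are assumptions based on OpenAI's published limits and should be checked against the current documentation, and `check_audio_file` is a hypothetical helper.

```python
from pathlib import Path

# Assumed values; confirm them against the current API documentation.
SUPPORTED_EXTENSIONS = {".mp3", ".mp4", ".mpeg", ".mpga", ".m4a", ".wav", ".webm"}
MAX_UPLOAD_BYTES = 25 * 1024 * 1024


def check_audio_file(path: str) -> list[str]:
    """Return a list of problems with the file; an empty list means it looks uploadable."""
    file_path = Path(path)
    if not file_path.is_file():
        return [f"{path} does not exist"]

    problems = []
    if file_path.suffix.lower() not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported extension: {file_path.suffix or '(none)'}")
    if file_path.stat().st_size > MAX_UPLOAD_BYTES:
        problems.append("file is larger than the assumed 25 MB upload limit")
    return problems
```

This only inspects the file name and size. It does not verify that the audio itself is clear or correctly encoded.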

4. Call the Whisper API (Python Example)

from openai import OpenAI

client = OpenAI(api_key="YOUR_API_KEY")  # or omit api_key to read OPENAI_API_KEY

with open("meeting_audio.mp3", "rb") as audio_file:
    transcript = client.audio.transcriptions.create(
        model="whisper-1",
        file=audio_file,
    )

print(transcript.text)

5. Call the Whisper API (Node.js Example)

import OpenAI from "openai";
import fs from "fs";

const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

const transcription = await openai.audio.transcriptions.create({
  file: fs.createReadStream("meeting_audio.mp3"),
  model: "whisper-1",
});

console.log(transcription.text);

6. Process the Transcript

Once Whisper returns the transcription:

- Store it as meeting notes, blog content, or captions.

- Feed it into ChatGPT for summarization, translation, or formatting.
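The hand-off from transcription to summarization could look roughly like the sketch below. It assumes the official Python SDK (version 1 or later) and uses `gpt-4o-mini` purely as an example model name. `build_summary_messages` and `summarize_transcript` are hypothetical helpers, and the prompt wording is only an illustration.

```python
def build_summary_messages(transcript_text: str, max_bullets: int = 5) -> list[dict]:
    """Build the chat messages that ask the model for a short bullet summary."""
    return [
        {
            "role": "system",
            "content": f"Summarize the transcript in at most {max_bullets} bullet points.",
        },
        {"role": "user", "content": transcript_text},
    ]


def summarize_transcript(transcript_text: str, model: str = "gpt-4o-mini") -> str:
    """Send the transcript to a chat model and return the summary text."""
    # Imported here so the message builder above works without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_summary_messages(transcript_text),
    )
    return response.choices[0].message.content
```

In a pipeline, the text returned by the transcription call (for example `transcript.text`) would be passed straight to `summarize_transcript`.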

Using Whisper API for Video Content Transcription

Many applications also need to transcribe speech from video files. By extracting the audio track from a video, you can use Whisper API for video-to-text transcription. This lets your app offer video captioning, searchable video archives, and better accessibility.
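Extracting the audio track is often done with the `ffmpeg` command-line tool. The sketch below assumes `ffmpeg` is installed and on the PATH. The mono, 16 kHz, 64 kbit/s settings are one reasonable choice for keeping speech files small, not a requirement of the API, and both function names are hypothetical.

```python
import subprocess


def build_ffmpeg_command(video_path: str, audio_path: str) -> list[str]:
    """Build an ffmpeg command that drops the video stream and writes compact audio."""
    return [
        "ffmpeg",
        "-y",              # overwrite the output file if it already exists
        "-i", video_path,  # input video
        "-vn",             # discard the video stream
        "-ac", "1",        # downmix to mono
        "-ar", "16000",    # resample to 16 kHz
        "-b:a", "64k",     # audio bitrate
        audio_path,        # output format is inferred from the extension
    ]


def extract_audio(video_path: str, audio_path: str = "audio.mp3") -> str:
    """Run ffmpeg and return the path of the extracted audio file."""
    subprocess.run(build_ffmpeg_command(video_path, audio_path), check=True)
    return audio_path
```

The resulting audio file can then be sent to the transcription endpoint like any other recording.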

Best Practices for Accurate Audio and Video Transcription

- Use clear audio recordings with minimal background noise.

- Support popular audio and video file formats to maximize compatibility.

- Implement error handling for API rate limits and unexpected responses.

- Allow users to review and edit transcriptions to ensure accuracy.
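Rate-limit handling is commonly implemented as retry with exponential backoff. The helper below is a generic sketch, not part of any SDK: `call_with_backoff` and its parameters are hypothetical, and the caller decides which exception types count as retryable.

```python
import random
import time


def call_with_backoff(func, retry_on=(Exception,), max_attempts=5,
                      base_delay=1.0, sleep=time.sleep):
    """Call func(), retrying on the given exceptions with exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the last error
            delay = base_delay * 2 ** (attempt - 1)  # 1s, 2s, 4s, ...
            # Random jitter keeps many clients from retrying at the same instant.
            sleep(delay + random.uniform(0, delay / 2))
```

With the official Python SDK, a call might be wrapped as `call_with_backoff(lambda: client.audio.transcriptions.create(...), retry_on=(openai.RateLimitError,))`, assuming version 1 or later of the SDK.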

Popular Use Cases of Whisper API Integration

- Meeting and Conference Transcripts for quick summaries and follow-ups.

- Podcast Transcriptions to improve content discoverability and SEO.

- Customer Support Call Logs for quality assurance and training.

- Video Captioning to comply with accessibility standards.

Limitations and Considerations

While Whisper API offers impressive transcription capabilities, it is essential to consider:

- The transcription quality depends heavily on audio clarity.

- Real-time streaming transcription may require additional infrastructure.

- Usage costs can increase with high-volume transcription needs.
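To get a feel for how costs scale with volume, a rough estimate can be computed from audio duration. The default rate of $0.006 per minute is an assumption based on the price listed for `whisper-1` at the time of writing. Check the current pricing page before relying on it. `estimate_cost_usd` is a hypothetical helper.

```python
def estimate_cost_usd(duration_seconds: float, price_per_minute: float = 0.006) -> float:
    """Estimate transcription cost in USD from the audio duration.

    price_per_minute is an assumed rate, not a guaranteed one.
    """
    if duration_seconds < 0:
        raise ValueError("duration_seconds must be non-negative")
    minutes = duration_seconds / 60
    return round(minutes * price_per_minute, 4)
```

Under that assumed rate, one hour of audio comes to about $0.36 and 1,000 hours to about $360, which is why volume matters when budgeting.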

Final Thoughts

Integrating Whisper API into your application is a practical way to add speech recognition and transcription features. By supporting both audio-to-text and video-to-text workflows, your app can handle a wide range of multimedia content and improve user engagement and accessibility.